Or “How I learned to start worrying and never trust the cloud.”

The Clouderati have been derping for some time now about how we’re all going towards the public cloud and “private cloud” will soon become a distant, painful memory, much like electric generators filled the gap before power grids became the norm. They seem far too glib about that prospect, and frankly, they should know better. When the Clouderati see the inevitability of the public cloud, their minds lead to unicorns and rainbows that are sure to follow. When I think of the inevitability of the public cloud, my mind strays to “The Empire Strikes Back” and who’s going to end up as Han Solo. When the Clouderati extol the virtues of public cloud providers, they prove to be very useful idiots advancing service providers’ aims, sort of the Lando Calrissians of the cloud wars. I, on the other hand, see an empire striking back at end users and developers, taking away our hard-fought gains made from the proliferation of free/open source software. That “the empire” is doing this *with* free/open source software just makes it all the more painful an irony to bear.

I wrote previously that It Was Never About Innovation, and that article was set up to lead to this one, which is all about the cloud. I can still recall talking to Nicholas Carr about his new book at the time, “The Big Switch“, all about how we were heading towards a future of utility computing, and what that would portend. Nicholas saw the same trends the Clouderati did, except a few years earlier, and came away with a much different impression. Where the Clouderati are bowled over by Technology! and Innovation!, Nicholas saw a harbinger of potential harm and warned of a potential economic calamity as a result. While I also see a potential calamity, it has less to do with economic stagnation and more to do with the loss of both freedom and equality.

The virtuous cycle I mentioned in the previous article does not exist when it comes to abstracting software over a network, into the cloud, and away from the end user and developer. In the world of cloud computing, there is no level playing field – at least, not at the moment. Customers are at the mercy of service providers and operators, and there are no “four freedoms” to fall back on.

When several of us co-founded the Open Cloud Initiative (OCI), it was with the intent, as Simon Phipps so eloquently put it, of projecting the four freedoms onto the cloud. There have been attempts to mandate additional terms in licensing that would force service providers to participate in a level playing field. See, for example, the great debates over “closing the web services loophole” as we called it then, during the process to create the successor to the GNU General Public License version 2. Unfortunately, while we didn’t yet realize it, we didn’t have the same leverage as we had when software was something that you installed and maintained on a local machine.

The Way to the Open Cloud

Many “open cloud” efforts have come and gone over the years, none of them leading to anything of substance or gaining traction where it matters. Bradley Kuhn helped drive the creation of the Affero GPL version 3, which set out to define what software distribution and conveyance mean in a web-driven world, but the rest of the world has been slow to adopt because, again, service providers have no economic incentive to do so. Where we find ourselves today is a world without a level playing field, which will, in my opinion, stifle creativity and, yes, innovation. It is this desire for “innovation” that drives the service providers to behave as they do, although as you might surmise, I do not think that word means what they think it means. As in many things, service providers want to be the arbiters of said innovation without letting those dreaded freeloaders have much of a say. Worse yet, they create services that push freeloaders into becoming part of the product – not a participant in the process that drives product direction. (I know, I know: yes, users can get together and complain or file bugs, but they cannot mandate anything over the providers)

Most surprising is that the closed cloud is aided and abetted by well-intentioned, but ultimately harmful actors. If you listen to the Clouderati, public cloud providers are the wonderful innovators in the space, along with heaping helpings of concern trolling over OpenStack’s future prospects. And when customers lose because a cloud company shuts its doors, the clouderati can’t be bothered to bring themselves to care: c’est la vie and let them eat cake. The problem is that too many of the clouderati think that Innovation! is a means to its own ends without thinking of ground rules or a “bill of rights” for the cloud. Innovation! and Technology! must rule all, and therefore the most innovative take all, and anything else is counter-productive or hindering the “free market”. This is what happens when the libertarian-minded carry prejudiced notions of what enabled open source success without understanding what made it possible: the establishment and codification of rights and freedoms. None of the Clouderati are evil, freedom-stealing, or greedy, per se, but their actions serve to enable those who are. Because they think solely in terms of Innovation! and Technology!, they set the stage for some companies to dominate the cloud space without any regard for establishing a level playing field.

Let us enumerate the essential items for open innovation:

- Set of ground rules by which everyone must abide, eg. the four freedoms

- Level playing field where every participant is a stakeholder in a collaborative effort

- Economic incentives for participation

These will be vigorously opposed by those who argue that establishing such a list is too restrictive for innovation to happen, because… free market! The irony is that establishing such rules enabled Open Source communities to become the engine that runs the world’s economy. Let us take each and discuss its role in creating the open cloud.

Ground Rules

We have already established the irony that the four freedoms led to the creation of software that was used as the infrastructure for creating proprietary cloud services. What if the four freedoms where tweaked for cloud services. As a reminder, here are the four freedoms:

- The freedom to run the program, for any purpose (freedom 0).

- The freedom to study how the program works, and change it so it does your computing as you wish (freedom 1).

- The freedom to redistribute copies so you can help your neighbor (freedom 2).

- The freedom to distribute copies of your modified versions to others (freedom 3).

If we rewrote this to apply to cloud services, how much would need to change? I made an attempt at this, and it turns out that only a couple of words need to change:

- The freedom to run the program or service, for any purpose (freedom 0).

- The freedom to study how the service works, and change it so it does your computing as you wish (freedom 1).

- The freedom to implement and redistribute copies so you can help your neighbor (freedom 2).

- The freedom to implement your modified versions for others (freedom 3).

Freedom 0 adds “or service” to denote that we’re not just talking about a single program, but a set of programs that act in concert to deliver a service.

Freedom 1 allows end users and developers to peak under the hood.

Freedom 2 adds “implement and” to remind us that the software alone is not much use – the data forms a crucial part of any service.

Freedom 3 also changes “distribute copies of” to “implement” because of the fundamental role that data plays in any service. Distributing copies of software in this case doesn’t help anyone without also adding the capability of implementing the modified service, data and all.

Establishing these rules will be met, of course, with howls of rancor from the established players in the market, as it should be.

Level Playing Field

With the establishment of the service-oriented freedoms, above, we have the foundation for a level playing field with actors from all sides having a stake in each other’s success. Each of the enumerated freedoms serves to establish a managed ecosystem, rather than a winner-take-all pillage and plunder system. This will be countered by the argument that if we hinder the development of innovative companies won’t we a.) hinder economic growth in general and b.) socialism!

In the first case, there is a very real threat from a winner-take-all system. In its formative stages, when everyone has the economic incentive to innovate (there’s that word again!), everyone wins. Companies create and disrupt each other, and everyone else wins by utilizing the creations of those companies. But there’s a well known consequence of this activity: each actor will try to build in the ability to retain customers at all costs. We have seen this happen in many markets, such as the creation of proprietary, undocumented data formats in the office productivity market. And we have seen it in the cloud, with the creation of proprietary APIs that lock in customers to a particular service offering. This, too, chokes off economic development and, eventually, innovation. At first, this lock in happens via the creation of new products and services which usually offer new features that enable customers to be more productive and agile. Over time, however, once the lock-in is established, customers find that their long-term margins are not in their favor, and moving to another platform proves too costly and time-consuming. If all vendors are equal, this may not be so bad, because vendors have an incentive to lure customers away from their existing providers, and the market becomes populated by vendors competing for customers, acting in their interest. Allow one vendor to establish a larger share than others, and this model breaks down. In a monopoly situation, the incumbent vendor has many levers to lock in their customers, making the transition cost too high to switch to another provider. In cloud computing, this winner-take-all effect is magnified by the massive economies of scale enjoyed by the incumbent providers. Thus, the customer is unable to be as innovative as they could be due to their vendor’s lock-in schemes. If you believe in unfettered Innovation! at all costs, then you must also understand the very real economic consequences of vendor lock-in. By creating a level playing field through the establishment of ground rules that ensure freedom, a sustainable and innovative market is at least feasible. Without that, an unfettered winner-take-all approach will invariably result in the loss of freedom and, consequently, agility and innovation.

Economic Incentives

This is the hard one. We have already established that open source ecosystems work because all actors have an incentive to participate, but we have not established whether the same incentives apply here. In the open source software world, developers participate because they had to, because the price of software is always dropping, and customers enjoy open source software too much to give it up for anything else. One thing that may be in our favor is the distinct lack of profits in the cloud computing space, although that changes once you include services built on cloud computing architectures.

If we focus on infrastructure as a service (IaaS) and platform as a service (PaaS), the primary gateways to creating cloud-based services, then the margins and profits are quite low. This market is, by its nature, open to competition because the race is on to lure as many developers and customers as possible to the respective platform offerings. However, the danger becomes if one particular service provider is able to offer proprietary services that give it leverage over the others, establishing the lock-in levers needed to pound the competition into oblivion.

In contrast to basic infrastructure, the profit margins of proprietary products built on top of cloud infrastructure has been growing for some time, which incentivizes the IaaS and PaaS vendors to keep stacking proprietary services on top of their basic infrastructure. This results in a situation where increasing numbers of people and businesses have happily donated their most important business processes and workflows to these service providers. If any of them are to grow unhappy with the service, they cannot easily switch, because no competitor would have access to the same data or implementation of that service. In this case, not only is there a high cost associated with moving to another service, there is the distinct loss of utility (and revenue) that the customer would experience. There is a cost that comes from entrusting so much of your business to single points of failure with no known mechanism for migrating to a competitor.

In this model, there is no incentive for service providers to voluntarily open up their data or services to other service providers. There is, however, an incentive for competing service providers to be more open with their products. One possible solution could be to create an Open Cloud certification that would allow services that abide by the four freedoms in the cloud to differentiate themselves from the rest of the pack. If enough service providers signed on, it would lead to a network effect adding pressure to those providers who don’t abide by the four freedoms. This is similar to the model established by the Free Software Foundation and, although the GNU people would be loathe to admit it, the Open Source Initiative. The OCI’s goal was to ultimately create this, but we have not yet been able to follow through on those efforts.

Conclusion

We have a pretty good idea why open source succeeded, but we don’t know if the open cloud will follow the same path. At the moment, end users and developers have little leverage in this game. One possibility would be if end users chose, at massive scale, to use services that adhered to open cloud principles, but we are a long way away from this reality. Ultimately, in order for the open cloud to succeed, there must be economic incentives for all parties involved. Perhaps pricing demands will drive some of the lower rung service providers to adopt more open policies. Perhaps end users will flock to those service providers, starting a new virtuous cycle. We don’t yet know. What we do know is that attempts to create Innovation! will undoubtedly lead to a stacked deck and a lack of leverage for those who rely on these services.

If we are to resolve this problem, it can’t be about innovation for innovation’s sake – it must be, once again, about freedom.

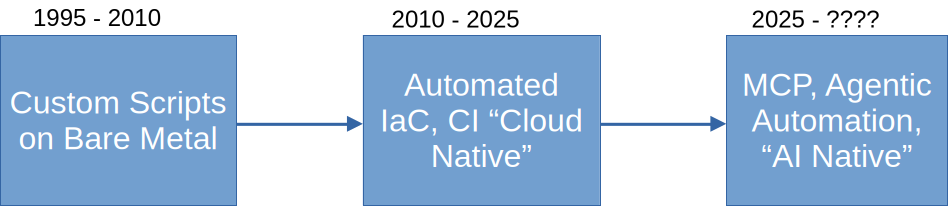

Considering that a lot of open source time and effort goes into infrastructure tooling – Kubernetes, OpenTofu, etc – one might draw the conclusion that, because these many of these tools are about to be disrupted and made obsolete, therefore “OMG Open Source is over!!11!!1!!”. Except, my conclusion is actually the opposite of that. I’ll explain.

Considering that a lot of open source time and effort goes into infrastructure tooling – Kubernetes, OpenTofu, etc – one might draw the conclusion that, because these many of these tools are about to be disrupted and made obsolete, therefore “OMG Open Source is over!!11!!1!!”. Except, my conclusion is actually the opposite of that. I’ll explain.